Last week I spoke at SXSW Interactive 2015 in Austin, Texas. Here’s a slightly edited transcript:

A Most Productive Year

Well, hello again. I’ve actually talked about computation three times before at SXSW. And I have to say when I first agreed to give this talk, I was worried that I would not have anything at all new to say. But actually, there’s a huge amount that’s new. In fact, this has probably been the single most productive year of my life. And I’m excited to be able to talk to you here today about some of the things that I’ve figured out recently.

It’s going to be a fairly wild ride, sort of bouncing between very conceptual and very practical—from thousand-year-old philosophy issues, to cloud technology to use here and now.

Basically, for the last 40 years I’ve been building a big tower of ideas and technology, working more or less alternately on basic science and on technology. And using the basic science to figure out more technology, and technology to figure out more science.

I’m happy to say lots of people have used both the science and the technology that I’ve built. But I think what we’ve now got is much bigger than before. Actually, talking to people the last couple of days at SXSW I’m really excited, because probably about 3/4 of the people that I’ve talked to can seriously transform—or at least significantly upgrade—what they’re doing by using new things that we’ve built.

The Wolfram Language

OK. So now I’ve got to tell you how. It all starts with the Wolfram Language. Which actually, as it happens, I first talked about by that name two years ago right here at SXSW.

The Wolfram Language is a big and ambitious thing which is actually both a central piece of technology, and a repository and realization of a bunch of fundamental ideas. It’s also something that you can start to use right now, free on the web. Actually, it runs pretty much everywhere—on the cloud, desktops, servers, supercomputers, embedded processors, private clouds, whatever.

From an intellectual point of view, the goal of the Wolfram Language is basically to express as much as possible computationally—to provide a very broad way to encapsulate computation and knowledge, and to automate, as much as possible, what can be done with them.

I’ve been working on building what’s now the Wolfram Language for about three decades. And in Mathematica and Wolfram|Alpha, many many people have used precursors of what we have now.

But today’s Wolfram Language is something different. It’s something much broader, that I think can be the way that lots of computation gets done sort of everywhere, in all sorts of systems and devices and whatever.

So let’s see it in action. Let’s start off by just having a little conversation with the language, in a thing we invented 26 years ago that we call a notebook. Let’s do something trivial.


Good. Let’s try something different. You may know that it was Pi Day on Saturday: 3/14/15. And since we are the company that I think has served more mathematical pi than any other in history, we had a little celebration about Pi Day. So let’s have the Wolfram Language compute pi for us; let’s say to a thousand places:

![N[Pi, 1000]](https://content.wolfram.com/sites/43/2015/03/in2-sxsw-2015.png)

There. Or let’s be more ambitious; let’s calculate it to a million places. It’ll take a little bit longer…

![N[Pi, 10^6]](https://content.wolfram.com/sites/43/2015/03/in3-sxsw-2015.png)

But not much. And there’s the result. It goes on and on. Look how small the scroll thumb is.

For something different we could pick up the Wikipedia article about pi:

![WikipediaData["Pi"]](https://content.wolfram.com/sites/43/2015/03/in4-sxsw-2015.png)

And make a word cloud from it:

![WordCloud[DeleteStopwords[%]]](https://content.wolfram.com/sites/43/2015/03/in5-sxsw-2015.png)

Needless to say, in the article about pi, pi itself features prominently.

Or let’s get an image. Here’s me:

![CurrentImage[]](https://content.wolfram.com/sites/43/2015/03/in6-sxsw-2015.png)

So let’s go ahead and do something with that image—for example, let’s edge-detect it. % always means the most recent thing we got, so…

![EdgeDetect[%]](https://content.wolfram.com/sites/43/2015/03/in7-sxsw-2015.png)

…there’s the edge detection of that image. Or let’s say we make a morphological graph from that image, so now we’ll make some kind of network:

![MorphologicalGraph[%]](https://content.wolfram.com/sites/43/2015/03/in8-sxsw-2015.png)

Oh, that’s quite fetching; OK. Or let’s automatically make a little user interface here that controls the degree of edginess that we have here—so there I am:

![Manipulate[EdgeDetect[CurrentImage[], r], {r, 1, 30}]](https://content.wolfram.com/sites/43/2015/03/in9-sxsw-2015.png)

Or let’s get a table of different levels of edginess in that picture:

![Table[EdgeDetect[CurrentImage[], r], {r, 1, 30}]](https://content.wolfram.com/sites/43/2015/03/in10-sxsw-2015.png)

And now for example we can take all of those images and stack them up and make a 3D image:

![Image3D[%, BoxRatios -> 1]](https://content.wolfram.com/sites/43/2015/03/in11-sxsw-2015.png)

A Language for the Real World

The Wolfram Language has zillions of different kinds of algorithms built in. It’s also got real-world knowledge and data. So, for example, I could just say something like “planets”:

So it understood from natural language what we were talking about. Let’s get a list of planets:

![EntityList[%]](https://content.wolfram.com/sites/43/2015/03/in13-sxsw-2015.png)

And there’s a list of planets. Let’s get pictures of them:

![EntityValue[%, "Image"]](https://content.wolfram.com/sites/43/2015/03/in14-sxsw-2015.png)

Let’s find their masses:

![EntityValue[%%, "Mass"]](https://content.wolfram.com/sites/43/2015/03/in15-sxsw-2015.png)

Now let’s make an infographic of planets sized according to mass:

![ImageCollage[% -> %%]](https://content.wolfram.com/sites/43/2015/03/in16-sxsw-2015.png)

I think it’s pretty amazing that it’s just one line of code to make something like this.
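The `%` and `%%` shorthand depends on the order of outputs in a live session. A minimal sketch of the same steps with named variables, assuming the natural-language input "planets" resolves to the built-in planet entity class; the `QuantityMagnitude` call is an addition here so the collage gets plain numeric weights:

```wolfram
(* The planets as entities (assumed to be what "planets" resolved to) *)
planets = EntityList[EntityClass["Planet", All]];

(* One image and one mass per planet, fetched from the knowledgebase *)
images = EntityValue[planets, "Image"];
masses = EntityValue[planets, "Mass"];

(* Collage in which each image gets an area weight given by the planet's mass *)
ImageCollage[QuantityMagnitude[masses] -> images]
```

This needs a connection to the Wolfram knowledgebase, so it will not run offline.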

Let’s go on a bit. This is where the internet thinks my computer is right now:


We could say, “When’s sunset going to be, at this position on this day?”

![Sunset[]](https://content.wolfram.com/sites/43/2015/03/in18-sxsw-2015.png)

How long from now?

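Some of the inputs for these steps are not reproduced in this transcript. A minimal sketch of how they could be written, assuming the built-in `Here`, `Sunset` and `Now`; the exact inputs used on stage may have differed:

```wolfram
(* Where the system believes this computer is *)
Here

(* Sunset at the current location on the current date *)
Sunset[]

(* Time remaining until that sunset, returned as a quantity *)
Sunset[] - Now
```

The results depend on where and when this is evaluated, so no outputs are shown.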

OK, let’s get a map of, say, 10 miles around the center of Austin:

![GeoGraphics[GeoDisk[(=Austin), (=10 mile)]]](https://content.wolfram.com/sites/43/2015/03/in20-sxsw-2015.png)

Or, let’s say, a powers of 10 sequence:

![Table[GeoGraphics[GeoDisk[(=Austin), Quantity[10^n, "Miles"]]], {n, -1, 4}]](https://content.wolfram.com/sites/43/2015/03/in21-sxsw-2015.png)
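The `(=Austin)` and `(=10 mile)` pieces in these inputs stand for free-form natural language entered inline. A sketch of the same maps with everything spelled out, assuming the canonical city entity for Austin, Texas is what the free-form input resolved to:

```wolfram
(* Explicit entity in place of the free-form input "Austin" *)
austin = Entity["City", {"Austin", "Texas", "UnitedStates"}];

(* Map of a 10-mile disk around the center of Austin *)
GeoGraphics[GeoDisk[austin, Quantity[10, "Miles"]]]

(* Powers-of-10 sequence: radii from 0.1 miles up to 10,000 miles *)
Table[GeoGraphics[GeoDisk[austin, Quantity[10^n, "Miles"]]], {n, -1, 4}]
```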

Or let’s go off planet and do the same kind of thing. We ask for the Apollo 11 landing site, and let’s show a thousand miles around that on the Moon:

![GeoGraphics[{Red, GeoDisk[First[(=apollo 11 landing site)], (=1000 miles)]}]](https://content.wolfram.com/sites/43/2015/03/in22-sxsw-2015.png)

We can do all kinds of things. Let’s try something in a different kind of domain. Let’s get a list of van Gogh’s artworks:

And let’s take, say, the first 20 of those, and let’s get images of those:

![EntityValue[Take[%, 20], "Image"]](https://content.wolfram.com/sites/43/2015/03/in24-sxsw-2015.png)

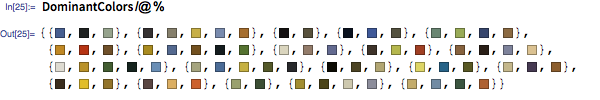

And now, for example, we can take those and say, “What were the dominant colors that were used in those images?”

And let’s plot those colors in a chromaticity diagram, in 3D:

![ChromaticityPlot3D[%]](https://content.wolfram.com/sites/43/2015/03/in26-sxsw-2015.png)
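Two of the inputs in this sequence (getting the artworks, and extracting the dominant colors) are missing from the transcript. What follows is a hedged sketch of the whole chain, not a record of what was typed. It assumes the `"NotableArtworks"` property of a person entity and the `DominantColors` function stand in for the missing steps:

```wolfram
(* Resolve the painter from natural language *)
vanGogh = Interpreter["Person"]["Vincent van Gogh"];

(* Assumed property for the list of artworks *)
artworks = EntityValue[vanGogh, "NotableArtworks"];

(* Images of up to the first 20 artworks, skipping any without an image *)
images = DeleteMissing[EntityValue[Take[artworks, UpTo[20]], "Image"]];

(* Dominant colors of each image, flattened into a single list of colors *)
colors = Flatten[DominantColors /@ images];

(* Plot the colors in a 3D chromaticity diagram *)
ChromaticityPlot3D[colors]
```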

Philosophy of the Wolfram Language

I think it’s fairly amazing what can be done with just tiny amounts of Wolfram Language code.

It’s really a whole new situation for programming. I mean, it’s a dramatic change. The traditional idea has been to start from a fairly small programming language, and then write fairly big programs to do what you want. The idea in the Wolfram Language is to make the language itself in a sense as big as possible—to build in as much as we can—and in effect to automate as much as possible of the process of programming.

These are the types of things that the Wolfram Language deals with:

And by now we’ve got thousands of built-in functions, tens of thousands of models and methods and algorithms and so on, and carefully curated data on thousands of different domains.

And I’ve basically spent nearly 30 years of my life keeping the design of all of this clean and consistent.

It’s been really interesting, and the result is really satisfying, because now we have something that’s incredibly powerful—that we’re also able to use to develop the language itself at an accelerating rate.

Tweetable Programs

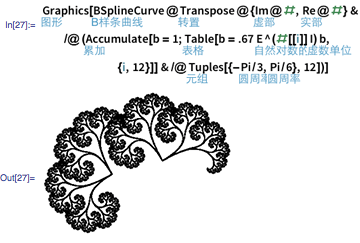

Here’s something we did recently to have some fun with all of this. It’s called Tweet-a-Program.

The idea here is you send a whole program as a tweet, and get back the result of running it. If you stop by our booth at the tradeshow here, you can pick up one of these little “Galleries of Tweetable Programs”. And here’s an online collection of some tweetable programs—and remember, every one of these programs is less than 140 characters long, and does all kinds of different types of things.

So to celebrate tweetable programs, we also have a deck of “code cards”, each with a tweetable program:

Computational Thinking for Kids

You know, if you look even at these tweetable programs, they’re surprisingly easy to understand. You can kind of just read the words to get a good idea how they work.

And you might think, OK, it’s like kids could do this. Well, actually, that’s true. And in fact I think this is an important moment for programming, where the same thing has happened as has happened in the past for things like video editing and so on: We’ve automated enough that the fancy professionals don’t really have any advantage over kids, and now that’s true in learning programming too.

So one thing I’m very keen on right now is to use our language as a way to teach computational thinking to a very broad range of people.

Soon we’ll have something we call Wolfram Programming Lab, which you can use free on the web. It’s kind of like immersion language learning for the Wolfram Language, where you see lots of small working examples of Wolfram Language programs that you get to modify and run.

I think it’s pretty powerful for education. Because it’s not just teaching programming: It’s immediately bringing in lots of real-world stuff, integrating with other things kids are learning, and really teaching a computational-thinking approach to everything.

So let’s take a look at a couple of examples. It’s been Pi Day; let’s look at Pi Necklaces:

The basic idea is that here’s a little piece of code—you can run it, see what it does, modify it. You can say to show the details, and it’ll tell you what’s going on. And so on.

And maybe we can try another example. Let’s say we do something a little bit more real-world… Where can you see from a particular skyscraper?

This will show us the visible region from the Empire State Building. And we could go ahead and change lots of parameters of this and see what happens, or you can go down and look at challenges, where it’s asking you to try and do other kinds of related computations.

I hope lots of people—kids and otherwise—will have fun with the explorations that we’ve been making. I think it’s great for education: a kind of mixture of sort of the precise thinking of math, and the creativity of something like writing. And, by the way, in the Programming Lab, we can watch programs people are trying to write, and do all kinds of education analytics inside.

I might mention that for people who don’t know English, we’ll soon be able to annotate any Wolfram Language programs in lots of other languages.

I think some amazing things are going to happen when lots more people learn to think computationally using the Wolfram Language.

Natural Language as Input

Of course, many millions of people already use our technology every day without any of that. They’re just typing pure natural language into Wolfram|Alpha, or saying things to Siri that get sent to Wolfram|Alpha.

I guess one big breakthrough has been being able to use our very precise natural language understanding, using both new kinds of algorithms and our huge knowledgebase.

And using all our knowledge and computation capabilities to generate automated reports for things that people ask about. Whether it’s questions about demographics:

Or about airplanes—this shows airplanes currently overhead where the internet thinks my computer is:

Or for example about genome sequences. It will go look up whether that particular random base-pair sequence appears somewhere on the human genome:

So those are a few sort of things that we can do in Wolfram|Alpha. And we’ve been covering thousands of different domains of knowledge, adding new things all the time.

By the way, there are now quite a lot of large organizations that have internal versions of Wolfram|Alpha that include their own data as well as our public data. And it’s really nice, because all types of people can kind of make “drive-by” queries in natural language without ever having to go to their IT departments.

You know, being able to use natural language is central to the actual Wolfram Language, too. Because when you want to refer to something in the real world—like a city, for example—you can’t be going to documentation to find out its name. You just want to type in natural language, and then get that interpreted as something precise.

Which is exactly what you can now do. So, for example, we would type something like:


and get:


And that would be understood as the entity “New York City”. And we could go and ask things like what’s the population of that, and it will tell us the results:

![New York City (city) ... ["Population"]](https://content.wolfram.com/sites/43/2015/03/in29-sxsw-2015.png)
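For readers without the notebook interface, roughly the same thing can be done in plain code. This sketch assumes `Interpreter["City"]` resolves the text "new york" to New York City; the second form names the entity explicitly:

```wolfram
(* Natural language text interpreted as a city entity *)
nyc = Interpreter["City"]["new york"];
nyc["Population"]

(* The same query written against the explicit entity *)
Entity["City", {"NewYork", "NewYork", "UnitedStates"}]["Population"]
```

The population figure comes from the knowledgebase at evaluation time, so no value is shown here.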

Big Idea: Symbolic Programming

There’s an awful lot that goes into making the Wolfram Language work—not only tens of millions of lines of algorithmic code, and terabytes of curated data, but also some big ideas.

Probably the biggest idea is the idea of symbolic programming, which has been the core of what’s become the Wolfram Language right from the very beginning.

Here’s the basic point: In the Wolfram Language, everything is symbolic. It doesn’t have to have any particular value; it can just be a thing.

If I just typed “x” in most computer languages, they’d say, “Help, I don’t know what x is”. But the Wolfram Language just says, “OK, x is x; it’s symbolic”.


And the point is that basically anything can be represented like this. If I type in “Jupiter”, it’s just a symbolic thing:

Or, for example, if I were to put in an image here, it’s just a symbolic thing:

And I could have something like a slider, a user-interface element—again, it’s just a symbolic thing:


And now when you compute, you can do anything with anything. Like you could do math with x:


Or with an image of Jupiter:

![Factor[(jupiter)^10 - 1]](https://content.wolfram.com/sites/43/2015/03/in35-sxsw-2015.png)

Or with sliders:

![Factor[Slider[]^10 - 1]](https://content.wolfram.com/sites/43/2015/03/in36-sxsw-2015.png)

Or whatever.
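A small text-only sketch of the same point. The choice of `x^10 - 1` follows the Jupiter and slider inputs above; using it for plain `x` is an assumption, since that input is not reproduced here:

```wolfram
(* An expression in a symbol that has no value *)
expr = x^10 - 1;

(* Internally it is just a tree of symbolic heads *)
FullForm[expr]    (* Plus[-1, Power[x, 10]] *)

(* Math works on the bare symbol... *)
Factor[expr]

(* ...and equally on any other symbolic object substituted for it *)
Factor[expr /. x -> Slider[]]

(* Everything has a head, whether or not it has a "value" *)
Head /@ {x, Slider[], expr}    (* {Symbol, Slider, Plus} *)
```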

It’s taken me a really long time, actually, to understand just how powerful this idea of symbolic programming really is. Every few years I understand it a little bit more.

Language for Deployment

Long ago we understood how to represent programs symbolically, and documents, and interfaces, so that they all instantly become things you can compute with. Recently one of the big breakthroughs has been understanding how to represent not only operations and content symbolically, but also their deployments.

OK, there’s one thing I need to explain first. What I’ve been showing you here today has mostly been using a desktop version of the Wolfram Language, though it’s going to the cloud to get things from our knowledgebase and so on. Well, with great effort we’ve also built a full version of the whole language in the cloud.

So let me use that interface there, just through a web browser. I’m going to have the exact same experience, so to speak. And we can do all these same kinds of things just purely in the cloud through a web browser.

![Wolfram Programming Cloud: Graphics3D[Sphere[]]](https://content.wolfram.com/sites/43/2015/03/wolfram-programming-cloud-in2-sxsw-2015.png)

![Wolfram Programming Cloud: Table[Rotate["hello",RandomReal[{0,2Pi}]],{100}]](https://content.wolfram.com/sites/43/2015/03/wolfram-programming-cloud-in3-sxsw-2015.png)

You know, in my 40 years of writing software, I don’t believe there’s ever been a development environment as crazy as the web and the cloud. It’s taken us a huge amount of effort to kind of hack through the jungle to get the functionality that we want. We’re pretty much there now. And of course the great news for people who just use what we’ve built is that they don’t have to hack through the jungle themselves, because we’ve already done that.

But OK, so you can use the Wolfram Language directly in the cloud. And that’s really useful. But you can also deploy other things in the language through the cloud.

Like, cat pictures are popular on the internet, so let’s deploy a cat app. Let’s define a form that has a field that asks for a breed of cat, then shows a picture of that. Then let’s deploy that to the cloud.


Now we get a cloud object with a URL. We just go there, and we get a form. The form has a “smart field”, that understands natural language—in this particular case, the language for describing cat breeds. So now we can type in, let’s say, “siamese”. And it will go back and run that code… OK. There’s a picture of a cat.
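The input for this first, single-field form is not reproduced in the transcript. A plausible reconstruction, obtained by dropping the angle field from the two-field version that appears next in the talk, might look like this (a sketch, not necessarily the exact input used):

```wolfram
(* A web form with one "smart field" that interprets text as a cat breed,
   and returns that breed's image as a PNG *)
CloudDeploy[
 FormFunction[{"cat" -> "CatBreed"}, #cat["Image"] &, "PNG"]]
```

Evaluating this requires being signed in to the Wolfram Cloud, and it returns a cloud object whose URL serves the form.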

We can make our web app a little more complicated. Let’s add in another field here.

![CloudDeploy[FormFunction[{"cat" -> "CatBreed", "angle" -> Restricted["Number", {0, 360}]}, Rotate[Magnify[#cat["Image"], 2], #angle Degree] &, "PNG"]]](https://content.wolfram.com/sites/43/2015/03/in38-sxsw-2015.png)

Again, we deploy to the cloud, and now have a cat at an angle there:

OK. So that’s how we can make a web app, which we can also deploy on mobile and so on. We can also make an API if we want to. Let’s use the same piece of code. Actually, the easiest thing to do would be just to edit that piece of code there, and change this from being a form to being an API:

![CloudDeploy[APIFunction[{"cat" -> "CatBreed", "angle" -> Restricted["Number", {0, 360}]}, Rotate[Magnify[#cat["Image"], 2], #angle Degree] &, "PNG"]]](https://content.wolfram.com/sites/43/2015/03/in39-sxsw-2015.png)

And now the thing that we have will be an API that we can go fill in parameters to; we can say “cat=manx”, “angle=300”, and now we can run that, and there’s another cat at an angle.

So that was an API that we just created, that could be used by anybody in the cloud. And we can call the API from anywhere—a website, a program, whatever. And actually we can automatically generate the code to call this from all sorts of other languages—let’s say inside Java.

![EmbedCode[%, "Java"]](https://content.wolfram.com/sites/43/2015/03/in40-sxsw-20151.png)

So in effect you can knit Wolfram Language functionality right into any project you’re doing in any language.

In this particular case, you’re calling code in our cloud. I should mention that there are other ways you can set this up, too. You can have a private cloud. You can have a version of the Wolfram Engine that’s on your computer. You can even have the Wolfram Engine in a library that can be explicitly linked into a program you’ve written.

And all this stuff works on mobile too. You can deploy an app that works on mobile; even a complete APK file for Android if you want.

There’s lots of depth to all this software engineering stuff. And it’s rather wonderful how the Wolfram Language manages to simplify and automate so much of it.

The Automation of Programming

You know, I get to see this story of automation up close at our company every day. We have all these projects—all these things we’re building, a huge amount of stuff—that you might think we’d need thousands of people to do. But you see, we’ve been automating things, and then automating our automation and so on, for a quarter of a century now. And so we still only have a little private company with about 700 people—and lots of automation.

It’s fairly spectacular to see: When we automate something—like, say, a type of web development—projects that used to be really painful, and take a couple of months, suddenly become really easy, and take like a day. And from a management point of view, it’s great how that changes the level of innovation that you attempt.

Let me give you a little example from a couple of weeks ago. We were talking about what to do for Pi Day. And we thought it’d be fun to put up a website where people could type in their birthdays, and find out where in the digits of pi those dates show up, and then make a cool T-shirt based on that.

Well, OK, clearly that’s not a corporately critical activity. But if it’s easy, why not do it? Well, with all our automation, it is easy. Here’s the code that got written to create that website:

It’s not particularly long. Somewhere here it’ll deploy to the cloud, and there it’s calling the Zazzle API, and so on. Let me show you the actual website that got made there:

And you can type in your birthday in some format, and then it’ll go off and try and find that birthday in the digits of pi. There we go; it found my birthday, at that digit position, and there’s a custom-created image showing me in pi and letting me go off and get a T-shirt with that on it.

And actually, zero programmers were involved in building this. As it happens, it was just done by our art director, and it went live a couple of days ago, before Pi Day, and has been merrily serving hundreds of thousands of custom T-shirt designs to pi enthusiasts around the world.
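The site's actual code is not reproduced in this transcript. The core lookup, though, can be sketched in a few lines. The helper name `findInPi` and the choice of one million digits are illustrative assumptions, and the T-shirt and Zazzle parts are left out entirely:

```wolfram
(* The first million decimal digits of pi as one string, starting "31415..." *)
piDigits = StringJoin[IntegerString[First[RealDigits[Pi, 10, 10^6]]]];

(* Strip everything except digits from the date, then find its first occurrence *)
findInPi[date_String] :=
  StringPosition[piDigits, StringDelete[date, Except[DigitCharacter]], 1];

(* Pi Day itself is right at the start; positions count the leading 3 as digit 1 *)
findInPi["3/14/15"]    (* {{1, 5}} *)
```

A date that does not occur in the first million digits gives an empty list, so a real site would need more digits or a fallback.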

Large-Scale Programs

It’s interesting to see how large-scale code development happens in the Wolfram Language. There’s an Eclipse-based IDE, and we’re soon going to release a bunch of integration with Git that we use internally. But one thing that’s very different from other languages is that people tend to write their code in notebooks.

They can put the whole story of their code right there, with text and graphics and whatever right there with the code. They can use notebooks to make structured tests if they want to; there’s a testing notebook with various tests in it we could run and so on:
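The testing notebook itself is not reproduced here. As a rough sketch of the kind of structured tests involved, assuming the built-in `VerificationTest` and `TestReport` functions are what such notebooks wrap, and using made-up test cases:

```wolfram
(* Each test pairs an input with its expected output *)
tests = {
   VerificationTest[Factor[x^2 - 1], (x - 1) (x + 1)],
   VerificationTest[StringLength["hello"], 5],
   VerificationTest[Total[Range[10]], 55]
   };

(* Collect the individual results into a single report *)
report = TestReport[tests];

(* Number of tests that passed *)
report["TestsSucceededCount"]
```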

And they can also use notebooks to make templates for computable documents, where you can directly embed symbolic Wolfram Language code that’ll get executed to make static or interactive documents that you can deliver as reports and so on.

By the way, one of the really nice things about this whole ecosystem is that if you see a finished result—say an infographic—there’s a standard way to include a kind of “compute-back link” that goes right back to the notebook where that graphic was made. So you see everything that’s behind it, and, say, start being able to use the data yourself. Which is useful for things like data publishing for research, or data journalism.

Internet of Things

OK, so, talking of data, a couple of weeks ago we launched what we call our Data Drop.

The idea is to let anything—particularly connected devices—easily drop data into our cloud, and then immediately make it meaningful, and accessible, to the Wolfram Language everywhere.

Like here’s a little device I have that measures a few things… actually, I think this particular one only measures light levels; kind of boring.

But in any case, it’s connected via wifi into our cloud. And everything it measures goes into our Data Drop, in this databin corresponding to that device.

![bin = Databin["3Mfto-_m"]](https://content.wolfram.com/sites/43/2015/03/in41-sxsw-2015.png)

We’re using what we call WDF—the Wolfram Data Framework—to say what the raw numbers from the device mean. And now we can do all kinds of computations.

OK, it hasn’t collected very much data yet, but we could go ahead and make a plot of the data that it’s collected:

![DateListPlot[bin]](https://content.wolfram.com/sites/43/2015/03/in42-sxsw-2015.png)

That was the light level as seen by that device, and I think it just sat there, and the lights got turned on and then it’s been a fixed light level—sorry, not very exciting. We can just make a histogram of that data, and again it’s going to be really boring in this particular case.

![Histogram[bin]](https://content.wolfram.com/sites/43/2015/03/in43-sxsw-20151.png)
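The databin above was filled by a physical device. To try the same flow without hardware, a sketch along these lines should work, assuming a Wolfram ID with Data Drop access; the key name `"light"` and the sample values are made up for illustration:

```wolfram
(* Create a fresh, empty databin in the cloud *)
bin = CreateDatabin[];

(* Drop a few readings into it; each entry is timestamped on arrival *)
DatabinAdd[bin, <|"light" -> 312|>];
DatabinAdd[bin, <|"light" -> 305|>];
DatabinAdd[bin, <|"light" -> 318|>];

(* The same one-line analyses as for the device's databin *)
DateListPlot[bin]
Histogram[bin]
```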

You know, we have all this data about the world from our knowledgebase integrated right into our language. And now with the Data Drop, you can integrate data from any device that you want. We’ve got a whole inventory of different kinds of devices, which we’ve been making for the last couple of years.

Once you get data into this Data Drop, you can use it wherever the Wolfram Language is used. Like in Wolfram|Alpha. Or Siri. Or whatever.

It’s really critical that the Wolfram Language can represent different types of data in a standard way, because that means you can immediately do computations, combine databins, whatever. And I have to say that just being able to sort of “throw data” into the Wolfram Data Drop is really convenient.

Like of course we’re throwing data from the My Pi Day website into a databin. And that means, for example, it’s just one line of code to see where in the world people have been interested in pi and generating pi T-shirts from, and so on.

![GeoGraphics[{Red, Point[Databin["3HPtHzvi"]["GeoLocations"]]}, GeoProjection -> "Albers", GeoRange -> Full, ImageSize -> 800]](https://content.wolfram.com/sites/43/2015/03/in43-sxsw-2015.png)

Some of you might know that I’ve long been an enthusiast of personal analytics. In fact, somewhat to my surprise, I think I’m the human who has collected more data on themselves than anyone else. Like here’s a dot for every piece of outgoing email that I’ve sent for the past quarter century.

But now, with our Data Drop, I’m starting to accumulate even more data. I think I’m already in the double digits in terms of number of databins. Like here’s my heart rate on Pi Day, from a databin. I think there’s a peak there right at the pi moment of the century.

![DateListPlot[Databin["3LV~DEJC", {DateObject[{2015, 3, 14, 7, 30, 0}], DateObject[{2015, 3, 15, 0, 0, 0}]}]["TimeSeries"]]](https://content.wolfram.com/sites/43/2015/03/in45-sxsw-2015.png)

Machine Learning

So, with all this data coming in, what are we supposed to do with it? Well, within the Wolfram Language we’ve got all this visualization and analysis capability. One of our goals is to be able to do the best data science automatically—without needing to take data scientists’ time to do it. And one area where we’ve been working on that a lot is in machine learning.

Let’s say you want to classify pictures into day or night. OK, so here I’ve got a little tiny training set of pictures corresponding to scenes that are day or night, and I just have one little function in the Wolfram Language, Classify, which is going to build a classifier to determine whether a picture is a day or a night one:

![daynight = Classify[{(classifier set)}]](https://content.wolfram.com/sites/43/2015/03/in46-sxsw-2015.png)

So there I’ve got the classifier. Now I can just apply that classifier to a collection of pictures, and it will tell me whether, according to that classifier, those pictures are day or night.

![daynight[{ (6 images) }]](https://content.wolfram.com/sites/43/2015/03/in47-sxsw-2015.png)

And we’re automatically figuring out what type of machine learning to use, and setting things up so that you have a classifier that you can use, or put in an app, or call through an API, or whatever. It takes just one function to do this.
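Images cannot be shown in plain text, so here is a sketch of the same `Classify` workflow on a toy numeric training set. The numbers and the labels "low" and "high" are invented for illustration, and the method chosen automatically may vary between versions:

```wolfram
(* A tiny labeled training set: example -> class *)
trainingData = {1.0 -> "low", 1.4 -> "low", 2.1 -> "low", 2.8 -> "low",
   7.2 -> "high", 8.5 -> "high", 9.1 -> "high", 9.9 -> "high"};

(* One function call builds the classifier, choosing the method automatically *)
c = Classify[trainingData];

(* Apply it to new examples *)
c[{1.7, 8.8}]

(* Ask for class probabilities instead of just the most likely class *)
c[5.0, "Probabilities"]
```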

We’ve got lots of built-in classifiers as well; all sorts of different kinds of things. Let me show you a new thing that’s just coming together now, which is image identification. And I’m going to live dangerously and try and do a live demo on some very new technology.

I asked somebody to go to Walmart and buy a random pile of stuff to try for image identification. So this is probably going to be really horrifying. Let’s see what happens here. First of all, let’s set it up so that I can actually capture some images. OK. I’m going to give it a little bit of a better chance by not having it have too funky of a background.

OK. Let us try one banana. Let’s try capturing a banana, and let us see what happens if I say ImageIdentify in our language…

OK! That’s good!

All right. Let’s tempt fate, and try a couple of other things. What’s this? It appears to be a toy plastic triceratops. Let’s see what the system thinks it is. This could get really bad here.

Oops. It says it’s a goat! Well, from that weird angle I guess I can see how it would think that.

OK, let’s try one more thing.

Oh, wow! OK! And the tag in the flower pot says the exact same thing! Which I certainly didn’t know. That’s pretty cool.

This does amazingly well most of the time. And what to me is the most interesting is that when it makes mistakes, like with the triceratops, the mistakes are very human-like. I mean, they’re mistakes that a person could reasonably make.

And actually, I think what’s going on here is pretty exciting. You know, 35 years ago I wanted to figure out brain-like things and I was studying neural nets and so on, and I did all kinds of computer experiments. And I ended up simplifying the underlying rules I looked at—and wound up studying not neural nets, but things called cellular automata, which are kind of like the simplest possible programs.

Mining the Computational Universe

And what I discovered is that if you look out into the computational universe of all those programs, there’s a whole zoo of possible behaviors that you see. Here’s an example of a whole bunch of cellular automata. Each one is a different program showing different kinds of behavior.

Even when the programs are incredibly simple, there can be incredibly complex behavior. Like, there’s an example; we can go and see what it does:
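The pictures from this part of the talk are not reproduced here, and the transcript does not say which rule was shown. A minimal sketch using the built-in `CellularAutomaton` function, with rule 30 picked as a well-known example of complex behavior from a very simple rule:

```wolfram
(* Rule 30, started from a single black cell on a white background, for 100 steps *)
ArrayPlot[CellularAutomaton[30, {{1}, 0}, 100]]

(* A few different rules side by side: each is a different simple program *)
Table[
 ArrayPlot[CellularAutomaton[rule, {{1}, 0}, 50]],
 {rule, {30, 90, 110, 250}}]
```

Changing the rule number between 0 and 255 gives the other elementary cellular automata.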

Well, that discovery led me on the path to developing a whole new kind of science that I wrote a big book about a number of years ago.

That’s ended up having applications all over the place. And for example, over the last decade it’s been pretty neat to see that the idea of modeling things using programs has been winning out over the idea that’s dominated exact science for about 300 years, of modeling things using mathematical equations.

And what’s also been really neat to see is the extent to which we can discover new technology just by kind of “mining” this computational universe of simple programs. Knowing some goal we have, we might sample a trillion programs to find one that’s good for our particular purposes.
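The "mining" idea can be sketched at toy scale. The Python below is only an illustration under stated assumptions: it searches the 256 elementary cellular automaton rules rather than a trillion programs, and the goal it uses (how many distinct 4-bit patterns show up in the centre column) is a hypothetical stand-in for a real purpose, not anything described in the talk. The names `evolve`, `centre_column`, `score` and `mine` are invented for this sketch.

```python
def evolve(rule, steps):
    """Run an elementary cellular automaton from a single black cell."""
    width = 2 * steps + 1  # wide enough that growth from the centre does not wrap around
    row = [0] * width
    row[steps] = 1
    rows = [row]
    for _ in range(steps):
        row = [
            (rule >> (4 * row[i - 1] + 2 * row[i] + row[(i + 1) % width])) & 1
            for i in range(width)
        ]
        rows.append(row)
    return rows


def centre_column(rule, steps=48):
    """Values of the middle cell at each step."""
    return [row[steps] for row in evolve(rule, steps)]


def score(rule, window=4):
    """Hypothetical goal: number of distinct `window`-bit patterns in the centre column."""
    col = centre_column(rule)
    return len({tuple(col[i:i + window]) for i in range(len(col) - window + 1)})


def mine(goal, candidates):
    """Exhaustively search the candidates and return the one that best meets the goal."""
    return max(candidates, key=goal)


if __name__ == "__main__":
    best = mine(score, range(256))
    print("best rule:", best, "score:", score(best))
```

The design point is that `mine` knows nothing about how the programs work; it only needs a way to run each candidate and a way to score the result against the goal. A larger search would swap exhaustive enumeration for sampling.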

That purpose could be making art, or it could be making some new image processing or some new natural-language-understanding algorithm, or whatever.

Finally, Brain-Like Computing

Well, OK, so there’s a lot that we can model and build with simple programs. But people have often said somehow the brain must be special; it must be doing more than that.

Back 35 years ago I could get neural networks to make little attractors or classifiers, but I couldn’t really get them to do anything terribly interesting. And over the years I actually wasn’t terribly convinced by most of the applications of things like neural nets that I saw.

But just recently, some threshold has been passed. And, like, the image identifier I was showing is using pretty much the same ideas as 35 years ago—with a bunch of good engineering tweaks, and perhaps a nod to cellular automata too. But the amazing thing is that just doing pretty much the obvious stuff, with today’s technology, just works.

I couldn’t have predicted when this would happen. But looking at it now, it’s sort of shocking. We’re now able to use millions of neurons, tens of millions of training images and thousands of trillions of the equivalent of neuron firings. And although the engineering details are almost as different as birds versus airplanes, the orders of magnitude are pretty much just the same as for us humans when we learn to identify images.

For me, it’s sort of the missing link for AI. There are so many things now that we can do vastly better than humans, using computers. I mean, if you put a Wolfram|Alpha inside a Turing test bot, you’ll be able to tell instantly that it’s not a human, because it knows too much and can compute too much.

But there’ve been these tasks like image identification that we’ve never been able to do with computers. But now we can. And, by the way, the way people have thought this would work for 60 years is pretty much the way it works; we just didn’t have the technology to see it until now.

So, does this mean we should use neural nets for everything now? Well, no. Here’s the thing: There are some tasks, like image identification, that each human effectively learns to do for themselves, based on what they see in the world around them.

Language as Symbolic Representation

But that’s not everything humans do. There’s another very important thing, pretty much unique to our species. We have language. We have a way of communicating symbolically that lets us take knowledge acquired by one person, and broadcast it to other people. And that’s kind of how we’ve built our civilization.

Well, how do we make computers use that idea too? Well, they have to have a language that represents the world, and that they can compute with. And conveniently enough, that’s exactly what the Wolfram Language is trying to be, and that’s what I’ve been working on for the last 30 years or so.

You know, there’s all this abstract computation out there that can be done. Just go sample cellular automata out in the computational universe. But the question is, how does it relate to our human world, to what we as humans know about or care about?

Well, what’s happened is that humans have tried to boil things down: to describe the world symbolically, using language and linguistic constructs. We’ve seen what’s out there in the world, and we’ve come up with words to describe things. We have a word like “bird”, which refers abstractly to a large collection of things that are birds. And by now in English we’ve got maybe 30,000 words that we commonly use, that are the raw material for our description of the world.

Well, it’s interesting to compare that with the Wolfram Language. In English, there’s been a whole evolution over thousands of years to settle on the perhaps convenient, but often incoherent, language structure that we have. In the Wolfram Language, we—and particularly I—have been working hard for many many years keeping everything as consistent and coherent as possible. And now we’ve got 5,000 or so “core words” or functions, together with lots of other words that describe specific entities.

And in the process of developing the language, what I’ve been doing explicitly is a little like what’s implicitly happened in English. I’ve been looking at all those computational things and processes out there, and trying to understand which of them are common enough that it’s worth giving names to them.

You know, this idea of symbolic representation seems to be pretty critical to human rational thinking. And it’s really interesting to see how the structure of a language can affect how people think about things. We see a little bit of that in human natural languages, but the effect seems to be much larger in computer languages. And for me as a language designer, it’s fascinating to see the patterns of thinking that open up when people start really understanding the Wolfram Language.

Some people might say, “Why are we using computer languages at all? Why not just use human natural language?” Well, for a start, computers need something to talk to each other in. But one of the things I’ve worked hard on in the Wolfram Language is making sure that it’s easy not only for computers, but also for humans, to understand—kind of a bridge between computers and humans.

And what’s more, it turns out there are things that human natural language, as it’s evolved, just isn’t very good at expressing. Just think about programs. There are some programs that, yes, can easily be represented by a little piece of English, but a lot of programs are really awkward to state in English. But they’re very clean in the Wolfram Language.

So I think we need both. Some things it’s easier to say in English, some in the Wolfram Language.

Post-Linguistic Concepts

But back to things like image identification. That’s a task that’s really about going from all the stuff out there, in this case in the visual world, and finding how to make it symbolic—how to describe things abstractly with words.

Now, here’s the thing: Inside the neural net, one thing that’s happening is that it’s implicitly making distinctions, in effect putting things in categories. In the early layers of the net those categories look remarkably like the categories we know are used in the early stages of human visual processing, and we actually have pretty decent words for them: “round”, “pointy”, and so on.

But pretty soon there are categories implicitly being used that we don’t have words for. It’s interesting that in the course of history, our civilization gradually does develop new words for things. Like in the last few decades, we’ve started talking about “fractal patterns”. But before then, those kinds of tree-like structures didn’t tend to get identified as being anything in particular, because we didn’t have words for them.

So our machines are going to discover a lot of categories that our civilization has not come up with. I’ve started calling these things a rather pretentious name: “post-linguistic emergent concepts”, or PLECs for short. I think we can make a metaframework for things like this within the Wolfram Language. But I think PLECs are part of the way our computers can start to really extend the traditional human worldview.

By the way, before we even get to PLECs, there are issues with concepts that humans already understand perfectly well. You see, in the Wolfram Language we’ve got representations of lots of things in the world, and we can turn the vast majority of things that people ask Wolfram|Alpha into precise symbolic forms. But we still can’t turn an arbitrary human conversation into something symbolic.

So how would we do that? Well, I think we have to break it down into some kind of “semantic primitives”: basic structures. Some, like fact statements, we already have in the Wolfram Language. And some, like mental statements, like “I think” or “I want”, we don’t.

The Ancient History

It’s a funny thing. I’ve been working recently on designing this kind of symbolic language. And of course people have tried to do things like this before. But the state of the art is actually mostly from a shockingly long time ago. I mean, back in the 1200s there was a chap called Ramon Llull who started working on this; in the 1600s, there were people like Gottfried Leibniz and John Wilkins.

It’s quite interesting to look at what those guys figured out with their “philosophical languages” or whatever. Of course, they never had an implementation substrate like we do today. But they understood quite a lot about ontological categories and so on. And looking at what they wrote really highlights, actually, what’s the same and what changes in the course of history. I mean, all their technology stuff is of course horribly out of date. But most of their stuff about the human condition is still just as valid as then, although they certainly had a lot more focus on mortality than we do today.

And today one interesting change is that we really need to attribute almost person-like internal state to machines. Not least because, as it happens, the early applications of all this everyday discourse stuff will be to things we’re building for people talking to consumer devices and cars and so on.

I could talk some about very practical here-and-now technology that’s actually going to be available starting next week, for making what we call PLIs, or Programmable Linguistic Interfaces. But instead let’s talk more about the big picture, and about the future.

The way I see things, throughout history there’s been a thread of using technology to automate more and more of what we do. Humans define goals, and then it’s the job of technology to automatically achieve those goals as well as possible.

A lot of what we’re trying to do with the Wolfram Language is in effect to give people a good way to describe goals. Then our job is to do the computations—or make the external API requests or whatever—to have those goals be achieved.

What Will the AIs Do?

So, in any possible computational definition of the objectives of AI, we’re getting awfully close to achieving them—and actually, in many areas we’ve gone far beyond anything like human intelligence.

But here’s the point: Imagine we have this box that sits on our desk, and it’s able to do all those intelligent things humans can do. The problem is, what does the box choose to do? Somehow it has to be given goals, or purposes. And the point is that there are no absolute goals and purposes. Any given human might say, “The purpose of life is to do X”. But we know that there’s nothing absolute about that.

Purpose ends up getting defined by society and history and civilization. There are plenty of things people do or want to do today that would have seemed absolutely inconceivable 300 years ago. It’s interesting to see the complicated interplay between the progress of technology, the progress of our descriptions of the world—through memes and words and so on—and the evolution of human purposes.

To me, the path of technology seems fairly clear. The evolution of human purposes is a lot less clear.

I mean, on the technology side, more and more of what we do ourselves we’ll be able to outsource to machines. We’ve already outsourced lots of mechanical thinking, like say for doing math. We’re well on the way to outsourcing lots of things about memory, and soon also lots of things about judgment.

People might say, “Well, we’ll never outsource creativity.” Actually, some aspects of that are among the easier things to outsource: We can get lots of inspiration for music or art or whatever just by looking out into the computational universe, and it’s only a matter of time before we can automatically combine those things with knowledge and judgment about the human world.

You know, a lot of our use of technology in the past has been “on demand”. But we’re going to see more and more preemptive use—where our technology predicts what we will want to do, then suggests it.

It’s sort of amusing to me when people talk about the machines taking over; here’s the scenario that I think will actually happen. It’s like with GPSs in cars: Most people—like me—just follow what the GPS tells them to do. Similarly, when there’s something that’s saying, you know, “Pick out that food on the menu”, or “Talk to that person in the crowd”, much of the time we’ll just do what the machine tells us to—partly because the machine is basically able to figure out a lot more than we can.

It’s going to get complicated when machines are acting collectively across a whole society, effectively implementing in software all those things that political philosophers have talked about theoretically. But even at an individual level, it’s very complicated to understand the goal structure.

Yes, the machines can help us “be ourselves, but better”, amplifying and streamlining things we want to do and directions we want to go.

Immortality & Beyond

You know, in the world today, we’ve got a lot of scarce resources. In many parts of the world much less scarce than in the past, but some resources are still scarce. The most notable is probably time. We have finite lives, and that’s a key part of lots of aspects of human motivation and purpose.

It’s surely going to be the biggest discontinuity ever in human history when we achieve effective human immortality. And, by the way, I have absolutely no doubt that we will achieve it. I just wish more progress would get made on things like cryonics to give my generation a better probability of making it to that.

But it’s not completely clear how effective immortality will happen. I think there are several paths that will probably in practice be combined. The first is that we manage to reverse-engineer enough of biology to put in patches that keep us running biologically indefinitely. That might turn out to be easy, but I’m concerned that it’ll be like trying to keep a server that’s running complex software up forever—which is something for which we have absolutely no theoretical framework, and lots of potential to run into undecidable halting problems and things like that.

The second immortality path is in effect uploading to some kind of engineered digital system. And probably this is something that would happen gradually. First we’d have digital systems that are directly connected to our brains, then these would use technology—perhaps not that different from the ImageIdentify function I was showing you—to start learning from our brains and the experiences we have, and taking more and more of the “cognitive load”… until eventually we’ve got something that responds exactly the same as our brain does.

Once we’ve got that, we’re dealing with something that can evolve quite quickly, independent of the immediate constraints of physics and chemistry, and that can for example explore different parts of the computational universe—inevitably sampling parts that are far from what we as humans currently understand.

Box of a Trillion Souls

So, OK, what is the end state? I often imagine some kind of “box of a trillion souls” that’s sort of the ultimate repository for our civilization. Now at first we might think, “Wow, that’s going to be such an impressive thing, with all that intelligence and consciousness and knowledge and so on inside.” But I’m afraid I don’t think so.

You see, one of the things that emerged from my basic science is what I call the Principle of Computational Equivalence, which says in effect that beyond some low threshold, all systems are equivalent in the sophistication of the computations they do. So that means that there’s not going to be anything abstractly spectacular about the box of a trillion souls. It’ll just be doing a computation at the same level as lots of systems in the universe.

Maybe that’s why we don’t see extraterrestrial intelligence: because there’s nothing abstractly different about something that came from a whole elaborate civilization as compared to things that are just happening in the physical world.

Now, of course, we can be proud that our box of a trillion souls is special, because it came from us, with our detailed history. But will interesting things be happening in it? Well, to define “interesting” we then need a sense of purpose—so things become pretty circular, and it’s a complicated philosophy discussion.

Back to 2015

I’ve come pretty far from talking about practical things for 2015. The way I like to work, understanding these fundamental issues is pretty important in not making mistakes in building technology here and now, because that’s how I’ve figured out one can build the best technology. And right now I’m really excited with the point we’ve reached with the Wolfram Language.

I think we’ve defined a new level of technology to support computational thinking, that I think is going to let people rather quickly do some very interesting things—going from algorithmic ideas to finished apps or new companies or whatever. The Wolfram Cloud and things around it are still in beta right now, but you can certainly try them out—and I hope you will. It’s really easy to get started—though, not surprisingly, because there are actually new ideas, there are things to learn if you really want to take the best advantage of this technology.

Well, that’s probably all I have to say right now. I hope I’ve been able to communicate a few of the exciting things that we’ve got going on right now, and a few of the things that I think are new and emerging in computational thinking and the technology around it. So, thanks very much.